Introduction:

This report describes using a tracked robot to autonomously open doors by pulling. We discuss multiple perception algorithms for automatically detecting doors and associated features such as the hinge and handle. We also outline some preliminary work on motion planning for the arm to grasp the handle and pull the door open. Motion planning to open pull-type doors is more difficult than for push-type doors, particularly using a non-holonomic robot, because it requires the coordinated motion of the robot’s base and two arms.

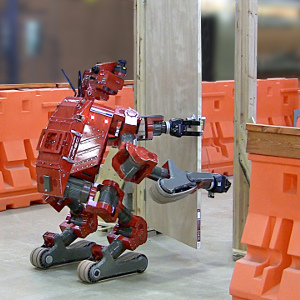

The methods discussed here were designed for the robot CHIMP (CMU Highly Intelligent Mobile Platform), a mobile manipulator designed to operate in hazardous environments [1]. The robot has multiple cameras, dual spinning LiDAR sensors, two 7-DOF arms and a differential drive with polyurethane tracks. In theory CHIMP can make zero-radius turns, but because of its heavy mass the tracks don’t favor sharp turns on high-friction surfaces. For robots with a holonomic (omnidirectional) base, the motion planning problem will be easier to solve.

Related Work:

Various research work has looked at opening doors by pushing, using a robot arm on a differential-drive mobile base:

- The YAMABICO-10 robot had a 6-DOF arm and a differential-drive base [2].

- CARDEA at MIT had a Segway base and a 5-DOF arm using SEA (Series Elastic Actuators) [3].

- The STAIR (Stanford Artificial Intelligence Robot) had a 5-DOF Katana arm on a Segway [4].

- EL-E at Georgia Tech had a 6-DOF manipulator (Katana arm + linear actuator) and an Erratic-brand base [5].

Using a 5-DOF arm, it’s possible to turn the door handle and push open the door without using coordinated, whole-arm motion planning [4,5]. It’s also possible to push open a door simply by driving forward, if the robot starts with its base in the correct initial position. It’s relatively easy to make corrections to the base position because a differential-drive robot can rotate in place. Collision-checking is also simple for robots that rotate about their center axis, because the footprint stays within a circle. The drive wheels on the EL-E robot are offset to the front [5], with a third caster wheel for balance, so a modified footprint is required for exact collision checking.

Other work has looked at pushing doors using a robot with a holonomic (omni-directional) drive:

- PSR-1 at KIST (Korea Institute of Science and Technology) had a 6-DOF arm and omnidirectional mobile base [6].

- The Milestone-2 experiment on the PR2 robot at Willow Garage, which has steerable caster wheels and a 7-DOF arm [7].

Using a robot capable of omni-directional motion makes it easy to plan movements for the base. A holonomic drive can rotate whilst driving through the door, equivalent to having an extra yaw joint in the arm. Steerable caster wheels are a bit more difficult to control and planning a smooth path is important to prevent rapid, instantaneous rotation changes by the wheels.

In this report we focus on the problem of opening doors by pulling, which is more difficult than pushing open doors.

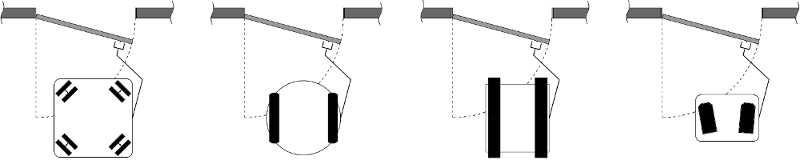

The strategy or behavior used to pull open a door depends on whether the robot has wheels or legs, and whether motion of the base is restricted in any direction (Fig. 2).

2.a: (left) Omni-directional e.g. PR2 robot.

2.b: Differential drive e.g. Segway.

2.c: Tracks or skid-steer e.g. CHIMP, PackBot.

2.d: Biped robot e.g. Atlas, HUBO.

Various work has looked at pulling open small doors like cupboards, drawers or fridges. This task can be achieved using a 7-DOF arm without moving the robot’s base, so we ignore that research in this report.

Two projects have demonstrated pulling open doors and driving the robot through:

- Later experiments using the PR2 robot at Willow Garage [8-11].

- Packbot 510 by iRobot, with tracked drive and 6-DOF total for manipulation [12].

The most complete demonstration of a fully autonomous robot capable of opening doors was with the PR2 robot [8-10]. At the time, other groups were able to download the source code and run the motion planning software on their own PR2 robot in their lab. Initially the robot opened pull-type doors using one arm [8], with the assumption that the door would not move after being released. The motion planning problem was decomposed into multiple sub-problems to reduce the dimensionality. A graph-based planner was used to first solve for the pose of the robot’s base and door, then subsequently solve for the arm motion [8].

The PR2 can also open spring-loaded pull-type doors using a two-arm strategy [9,10]. The graph-based planning method from [8] was improved and can solve the entire motion planning problem, using a combined state space for the base and arm. The PR2 was also used to open heavy spring-loaded doors [11] that required too much opening force for the dual-arm hand-off approach used in [9,10]. The strategy employed in [11] is that after cracking the door ajar, the PR2 rotates around the door and uses its back to hold the door open, without need of the second arm.

Using a tracked robot to pull open a door is more challenging. If the arms aren’t long enough, the door will collide with the base as it is pulled inwards and the robot will need to be repositioned. The company iRobot has developed a semi-autonomous door opening system for the PackBot 510 robot using an application-specific underactuated manipulator [12]. A user-guided perception system detects the door knob or handle in the image from a 2D camera. The handle is grasped using a touch-sensitive gripper and 3-DOF in the arm. The complicated kinematics for pulling the door are simplified by using a flexible 2-DOF joint in the wrist that is electromechanically activated. A spring-loaded door can be held open using a 1-DOF flipper arm at the front of the robot, allowing the arm to regrasp the door on the inside face. A sequence of scripted motions is used to control the arm and base, similar to the approach used for the CHIMP robot in the DARPA Robotics Challenge [1].

Approach:

3.a: (top) Pull doors that are recessed (set back) from the wall.

3.b: Pull doors where the door surface is coplanar with the wall.

3.c: Double doors.

3.d: Interior doors.

This section describes our approach for detecting the door using camera and/or LiDAR, and constrained motion planning to turn the handle and pull the door open using a 7-DOF arm. Our work on combined arm-base motion planning is unfinished at this time.

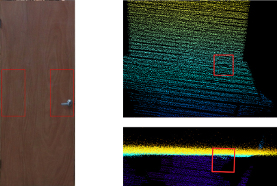

We studied various examples of pull-type doors that a rescue robot might encounter in an industrial environment (Fig. 3). All the doors are a different color or texture to the surrounding wall, which suggests that using a camera is a good approach. However, the surface of the door is typically co-planar with the surrounding wall and a LiDAR sensor will fail to discriminate the geometry. Some doors do have a raised moulding or frame surrounding the door, but various design considerations mean that pull-type doors are usually flush with the wall surface.

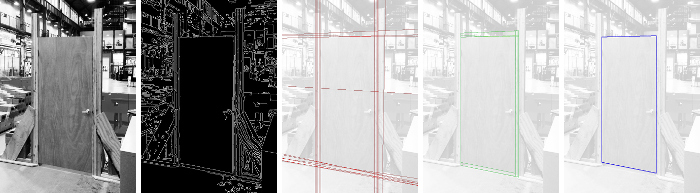

For our experiments we used the most difficult test case: a pull door that was the same color as, and flush with, the surrounding frame (see Fig. 4). The algorithm parameters for the various modules were found using grid-search and a dataset of camera and LiDAR data.

Detecting Door Candidates:

This means detecting the position and outline of the door.

Four algorithms were tested:

1. Camera-only:

This method detects the best-fitting quadrilateral in the image (Fig. 4e). Without a 3D sensor, this does not output the pose of the door.

- Pre-processing (convert RGB image to perspective projection, histogram equalization, normalization, convert to grayscale).

- Detect edges (Gaussian blur, Canny).

- Detect lines (Hough transform).

- Sort strongest lines (value in the Hough accumulator).

- Filter lines into approximately vertical lines (+/- 1°) and horizontal lines (+/- 30°).

- Keep 10+10 strongest lines.

- Generate combinations of pairs of lines (minimum separation distance).

- Generate set of candidate quadrilaterals (combinations of two vertical and two horizontal lines).

- Count edge pixels under each quad.

- Sort quads (number of edge pixels, ratio height-to-width).
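The line filtering and quadrilateral generation steps above can be sketched in plain Python. This is a minimal illustration, not the code used on CHIMP. It assumes the Hough transform has already produced `(rho, theta, votes)` triples in the OpenCV-style normal form, and the function names, the separation thresholds and the use of `|rho|` as a rough proxy for the distance between two lines are all assumptions made for the example.

```python
import math
from itertools import combinations

def split_lines(lines, vert_tol_deg=1.0, horiz_tol_deg=30.0, keep=10):
    """Split Hough lines into the strongest near-vertical and near-horizontal sets.

    lines: list of (rho, theta_rad, votes). theta is the angle of the line's
    normal, so theta near 0 or pi is a vertical line and theta near pi/2 is
    a horizontal line.
    """
    vertical, horizontal = [], []
    # Visit the strongest lines first (largest accumulator value).
    for rho, theta, votes in sorted(lines, key=lambda l: -l[2]):
        deg = math.degrees(theta) % 180.0
        if min(deg, 180.0 - deg) <= vert_tol_deg:
            vertical.append((rho, theta, votes))
        elif abs(deg - 90.0) <= horiz_tol_deg:
            horizontal.append((rho, theta, votes))
    return vertical[:keep], horizontal[:keep]

def line_pairs(lines, min_sep):
    """Pairs of lines whose |rho| values differ by at least min_sep pixels."""
    return [(a, b) for a, b in combinations(lines, 2)
            if abs(abs(a[0]) - abs(b[0])) >= min_sep]

def candidate_quads(vertical, horizontal, min_width, min_height):
    """Each candidate is (pair of vertical lines, pair of horizontal lines)."""
    return [(v, h)
            for v in line_pairs(vertical, min_width)
            for h in line_pairs(horizontal, min_height)]
```

Each candidate would then be scored by counting the Canny edge pixels that lie under its four sides, which is omitted here because it needs the edge image.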

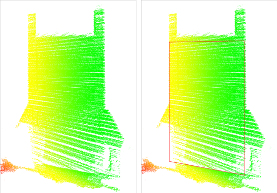

2. Camera + LiDAR:

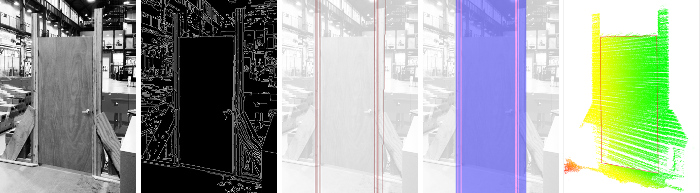

This method finds patches in the image and uses these to check sets of co-planar points in the point cloud (Fig. 5).

- Pre-processing (convert RGB image to perspective projection, histogram equalization, normalization, convert to grayscale).

- Detect edges (Gaussian blur, Canny).

- Detect lines (Hough transform).

- Sort strongest lines (value in the Hough accumulator).

- Keep approximately vertical lines (+/- 1°).

- Keep 10 strongest vertical lines.

- Sort vertical lines from left-to-right in image (rho value from Hough transform).

- Using consecutive pairs of lines, define set of non-overlapping patches (vertical strips).

- Project each pair of lines into the 3D point cloud to define a square-shaped frustum.

- Within each frustum, find the best set of planar points using RANSAC (vertical plane, most inliers, normal vector +/- 45° towards robot).

- Sort the sets of coplanar points (largest 2D area, ratio height-to-width).

- Check that the planar region is approximately the width of a typical door, using dimensions specified by the ADA (Americans with Disabilities Act).

- Attempt to grow planar region (check neighbor regions, coplanar, separation distance, height).
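The RANSAC step in the list above (vertical plane, most inliers, normal within +/- 45° of the robot) could look roughly like the following. This is a sketch under stated assumptions rather than the implementation used on the robot: points are `(x, y, z)` tuples in a robot frame with z up, `view_dir` is a hypothetical unit vector from the robot towards the door, and the tolerance values are placeholders.

```python
import math
import random

def ransac_vertical_plane(points, view_dir=(1.0, 0.0, 0.0), iters=200,
                          inlier_tol=0.02, max_tilt_deg=5.0,
                          max_yaw_deg=45.0, seed=0):
    """Return (normal, d, inliers) for the best plane n.p + d = 0, or None."""
    rng = random.Random(seed)
    best = None
    for _ in range(iters):
        p0, p1, p2 = rng.sample(points, 3)
        u = [p1[i] - p0[i] for i in range(3)]
        v = [p2[i] - p0[i] for i in range(3)]
        # Plane normal from the cross product of two edge vectors.
        n = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
        norm = math.sqrt(sum(c * c for c in n))
        if norm < 1e-9:
            continue  # degenerate sample (collinear points)
        n = tuple(c / norm for c in n)
        # Vertical plane: the normal must be nearly horizontal.
        if abs(n[2]) > math.sin(math.radians(max_tilt_deg)):
            continue
        # Normal must be within max_yaw_deg of the viewing direction (either sign).
        facing = abs(sum(n[i] * view_dir[i] for i in range(3)))
        if facing < math.cos(math.radians(max_yaw_deg)):
            continue
        d = -sum(n[i] * p0[i] for i in range(3))
        inliers = [p for p in points
                   if abs(sum(n[i] * p[i] for i in range(3)) + d) <= inlier_tol]
        if best is None or len(inliers) > len(best[2]):
            best = (n, d, inliers)
    return best
```

Rejecting samples that violate the orientation constraints before counting inliers keeps the loop cheap, since the inlier count is the expensive part.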

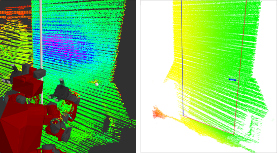

3. LiDAR-only:

An iterative method to detect all vertical planes that satisfy dimensions specified by the ADA (Americans with Disabilities Act), similar to that used on the PR2 robot in [7]. This algorithm fails to correctly detect the door outline and hinge when the door surface is coplanar with the surrounding frame (Fig. 6b).

- Downsample point cloud.

- Compute normals.

- Filter points (normal vector +/- 45° towards robot).

- Segment points into clusters using region-growing method (normal vector, point-to-point distance, maximum width, maximum height).

- Sort 3D point clusters using geometric heuristics (2D area, ratio 2D height-to-width).

6.a: Input point cloud.

6.b: The algorithm fails to detect the correct edges of the door.

4. User-guided:

This method is seeded by input from the human operator:

- Operator uses mouse to select the edge of the door with the hinge.

- Operator selects the opposite edge, with the door handle.

- The ground height is the approximate height of the robot’s tracks.

- The door height is a fixed constant.

- Crop 3D points using square-shaped frustum.

- Find best plane using RANSAC (vertical plane, most inliers, normal vector +/- 45° towards robot).

7.a: The operator uses the mouse to click on the hinge and handle.

7.b: Successful detection of the door features.

Door Pose Refinement:

8.a: Regions-of-interest on sub-image of door.

8.b: A metal door handle in the point cloud.

This means determining the exact location of the hinge (door leaf), the handle, and the width of the door. Three algorithms were tested, numbered here to match the method of door detection.

1 or 2. Camera image:

The door handle must be located in either of two regions (Fig. 8.a), so these pixels are analyzed.

- Use corner points of detected quadrilateral to compute perspective transform.

- Warp the image.

- Generate 2 regions-of-interest, using ADA specifications.

- Detect lines and/or circles within each ROI, using Hough transform.

- The door hinge is on the opposite side to the detected handle.
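As a rough illustration of the region-of-interest step above, the sketch below places two boxes on the rectified (warped) door image, one along each vertical edge. The numbers are assumptions and not taken from this work: the default height band (about 0.865 m to 1.22 m above the floor) is an assumed reading of the ADA hardware-height range and should be checked against the standard, and the door height and strip width are hypothetical defaults.

```python
def handle_rois(width_px, height_px, door_height_m=2.03,
                band_m=(0.865, 1.22), strip_frac=0.25):
    """Return (left, right) boxes as (x0, y0, x1, y1) on a rectified door image.

    The image is assumed to show exactly the door, with the floor at the
    bottom row. band_m is the (min, max) handle height above the floor.
    """
    px_per_m = height_px / door_height_m
    # Image rows grow downwards, so the higher edge of the band has the smaller row.
    y0 = int(round(height_px - band_m[1] * px_per_m))
    y1 = int(round(height_px - band_m[0] * px_per_m))
    strip = int(round(width_px * strip_frac))
    left = (0, y0, strip, y1)
    right = (width_px - strip, y0, width_px, y1)
    return left, right
```

Whichever box produces the stronger line or circle response is taken as the handle side.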

3. LiDAR point cloud:

This looks for a cluster of LiDAR points that are offset from the door (Fig. 8.b). Unfortunately the point cloud produced by the spinning LiDAR was too sparse to get reliable detections of a reflective metal door handle. The authors of [7] had the same problem with the nodding LiDAR on the PR2 robot.

- Re-run plane fitting in the cropped region using the high-resolution point cloud.

- Generate 2 regions-of-interest, using ADA specifications.

- Check for 3D points offset from the plane, within each ROI.

- Cluster outlier points using K-means.

- The horizontal extents of that plane become the position of the door hinge.

4. Via user-input:

The human operator has already clicked on 2 points in the pointcloud. Instead of just selecting the plane, these points are used to define the door position. The first point is used as the hinge axis and the second point is used to determine the door width and handle height.
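A minimal sketch of how two clicked points might be turned into a door description is given below. The function name, the dictionary keys and the `ground_z` parameter are hypothetical. Points are assumed to be `(x, y, z)` in metres in a robot frame with z up.

```python
import math

def door_from_clicks(hinge_pt, handle_pt, ground_z=0.0):
    """Derive a simple door model from two operator-selected 3D points."""
    dx = handle_pt[0] - hinge_pt[0]
    dy = handle_pt[1] - hinge_pt[1]
    return {
        # The vertical hinge axis passes through this ground-plane location.
        "hinge_xy": (hinge_pt[0], hinge_pt[1]),
        # Horizontal distance from the hinge edge to the handle edge.
        "width": math.hypot(dx, dy),
        # Heading of the closed door, from hinge towards handle.
        "yaw": math.atan2(dy, dx),
        # Height of the second click above the assumed ground level.
        "handle_height": handle_pt[2] - ground_z,
    }
```

For example, clicks at `(2.0, 0.5, 1.0)` and `(2.0, -0.4, 1.0)` describe a door about 0.9 m wide that runs along the negative y direction from the hinge.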

To summarize, algorithms 1 and 2 can reliably detect various types of door. Algorithm 2 (camera + LiDAR) can also detect the pose of the door relative to the robot. Algorithm 3 (LiDAR-only) works for most types of door where the door surface is offset from the door frame; however, detecting all planes in an unstructured 3D point cloud took a long time to compute. Algorithm 4 (user-guided) enables almost instant detection of doors for a teleoperated robot.

The Hokuyo LiDAR has a depth accuracy of +/- 30mm, or closer to 35mm when using the dual scanner LiDAR on the CHIMP robot. We were able to learn an approximate LiDAR depth error model when the robot is facing front-on towards a wood door, but there is still a risk that the robot is too close or too far away from the door. Therefore touch-based manipulation is required to check the depth to the door and/or location of the handle. The results described in the literature support the same conclusion: touch-based manipulation is critical for door opening.

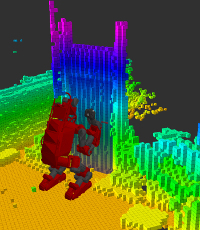

Arm Motion planning:

During motion planning, collision-checking is performed between the robot’s geometry and the environment (Fig. 9). The door is modeled using a cuboid shape, with its pose as found by the door detector. Any other objects near the door are modeled using a Voxel Grid generated from the latest LiDAR scan. The robot’s geometry is represented using multiple intersecting bounding boxes (cuboids), which is more efficient than a polygon mesh.
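The collision test described above can be illustrated with a simplified sketch. It assumes axis-aligned boxes and a voxel grid stored as a set of integer indices. The real robot and door cuboids would generally be oriented, so this shows only the structure of the check, and all names are hypothetical.

```python
import math

def boxes_overlap(a, b):
    """a, b: ((xmin, ymin, zmin), (xmax, ymax, zmax)) axis-aligned boxes."""
    return all(a[0][i] <= b[1][i] and b[0][i] <= a[1][i] for i in range(3))

def box_hits_voxels(box, occupied, voxel_size):
    """occupied: set of integer (i, j, k) indices of occupied voxels."""
    lo = [math.floor(box[0][i] / voxel_size) for i in range(3)]
    hi = [math.floor(box[1][i] / voxel_size) for i in range(3)]
    # Test every voxel cell that the box touches.
    return any((i, j, k) in occupied
               for i in range(lo[0], hi[0] + 1)
               for j in range(lo[1], hi[1] + 1)
               for k in range(lo[2], hi[2] + 1))

def robot_in_collision(robot_boxes, door_box, occupied, voxel_size):
    """True if any robot box touches the door cuboid or an occupied voxel."""
    return any(boxes_overlap(b, door_box) or box_hits_voxels(b, occupied, voxel_size)
               for b in robot_boxes)
```

A handful of box-box tests per arm configuration is much cheaper than mesh intersection, which is the efficiency argument made in the paragraph above.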

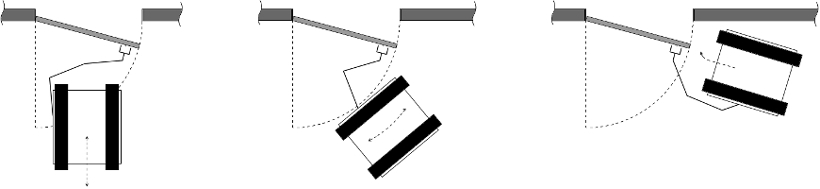

There are various strategies for opening a pull door using a robot with tracked drive (Fig. 10 below):

- A. Arms are long enough to fully open the door without moving the base.

- B. The base is driven along an arc-shaped path while opening the door.

- C. Arm and base motions are interleaved.

In this work we focused on motion planning for the arm(s) which meant we could only test strategy ‘A’ in Fig. 10.

10.a: (left) If the robot’s arms are long enough, the door can be manipulated using 2 arms. The base only drives forward and backward.

10.b: (center) For a heavy door, the robot uses its left arm to ‘crack’ open the door. The base drives in an arc-shape to pull open the door.

10.c: (right) The robot uses both arms to manipulate the door. There are multiple potential sub-strategies from this state, including pushing the door open with the front of the robot, or rotating and passing through backwards.
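Whether strategy A is feasible depends on the handle staying within reach as the door swings. The sketch below is a crude planar check and not the constrained planner discussed in this report: it samples the handle position along its arc about the hinge and compares each sample against a single hypothetical shoulder position and maximum reach. The `swing` sign that corresponds to pulling towards the robot depends on which side the hinge is on.

```python
import math

def handle_path(hinge_xy, width, closed_yaw, open_angle, steps=10, swing=1):
    """Handle positions (x, y) as the door rotates about the hinge.

    closed_yaw: heading from hinge to handle when the door is closed (rad).
    open_angle: total opening angle (rad); swing is +1 or -1 for direction.
    """
    path = []
    for k in range(steps + 1):
        a = closed_yaw + swing * open_angle * k / steps
        path.append((hinge_xy[0] + width * math.cos(a),
                     hinge_xy[1] + width * math.sin(a)))
    return path

def reachable_without_base_motion(path, shoulder_xy, max_reach):
    """True if every sampled handle position is within max_reach of the shoulder."""
    return all(math.hypot(p[0] - shoulder_xy[0], p[1] - shoulder_xy[1]) <= max_reach
               for p in path)
```

If the check fails for a fixed base pose, the base has to move during the pull, which corresponds to strategies B and C.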

Results & Discussion:

At the DARPA Robotics Challenge Finals we used a simpler approach, for speed and reliability. There was some concern that using the camera image could be unreliable in an outdoor environment with varying levels of brightness, so we used only the LiDAR sensor for perception. Planning the constrained trajectories for the arms was computationally intensive and time-consuming, so we developed a library of scripted motion primitives. The final system consisted of user-guided detection of the door hinge and handle, and a scripted sequence of arm motions to manipulate the door [1]. The CHIMP robot successfully opened a push-type door, with a human operator driving the base and initiating arm motion scripts.

It is risky to use open-loop scripted motions because the robot could be too close to the door surface or too far away from the door handle. In these situations the robot’s arm could collide with the door or its gripper could fail to touch the handle. On day 1 of the competition, the robot was driven too close to the door and at a slight angle. When turning the door handle the robot’s forearm was pushing hard against the door surface before the latch was released. This caused the door to ‘stick’ in the frame, and when the door ‘popped’ open the robot was momentarily destabilized and rolled backwards. On day 2 of the competition, the robot was positioned too far away from the door surface. The operator tried to turn the handle 7 times, with slight adjustments in-between, until the door was finally pushed open.

Future work will be to:

- Investigate why the constrained motion planner and/or collision checking is so slow.

- Develop a combined arm-and-base motion planner.

- Compare the planning complexity and success-rate of opening pull-type doors using robots with various base types.

- Implement a more reliable method to detect the position of the door and handle, such as using force (touch) sensing or an I.R. distance sensor.

This work was conducted with J. Gonzalez-Mora and K. Strabala, at CMU and NREC.

References:

[1] G. C. Haynes, D. Stager, A. Stentz, B. Zajac, D. Anderson, D. Bennington, J. Brindza, D. Butterworth, C. Dellin, M. George, J. Gonzalez-Mora, M. Jones, P. Kini, M. Laverne, N. Letwin, E. Perko, C. Pinkston, D. Rice, J. Scheifflee, K. Strabala, J. M. Vande Weghe, M. Waldbaum, R. Warner, E. Meyhofer, A. Kelly and H. Herman, “Developing a Robust Disaster Response Robot: CHIMP and the Robotics Challenge”, Journal of Field Robotics, Vol. 34, No. 2, March 2017.

[2] K. Nagatani and S. Yuta, “Designing a Behavior to Open a Door and to Pass through a Door-way using a Mobile Robot Equipped with a Manipulator”, Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), September 1994.

[3] R. Brooks, L. Aryananda, A. Edsinger, P. Fitzpatrick, C. Kemp, U. O’Reilly, E. Torres-Jara, P. Varshavskaya and J. Weber, “Sensing and Manipulating Built-for-human Environments”, International Journal of Humanoid Robotics (IJHR), Volume 1 No. 1, March 2004.

[4] A. Petrovskaya and A. Y. Ng, “Probabilistic Mobile Manipulation in Dynamic Environments, with Application to Opening Doors”, Proceedings of the 20th International Joint Conference on Artificial Intelligence (IJCAI), January 2007.

[5] A. Jain and C. C. Kemp, “Behaviors for Robust Door Opening and Doorway Traversal with Force-Sensing Mobile Manipulator”, Robotics: Science & Systems (RSS), workshop on Robot Manipulation: Intelligence in Human Environments, June 2008.

[6] C. Rhee, W. Chung, M. Kim, Y. Shim and H. Lee, “Door opening control using the multi-fingered robotic hand for the indoor service robot”, Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), May 2004.

[7] W. Meeussen, M. Wise, S. Glaser, S. Chitta, C. McGann, P. Mihelich, E. Marder-Eppstein, M. Muja, V. Eruhimov, T. Foote, J. Hsu, R. B. Rusu, B. Marthi, G. Bradski, K. Konolige, B. Gerkey and E. Berger, “Autonomous Door Opening and Plugging In with a Personal Robot”, Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), May 2010.

[8] S. Chitta, B. Cohen and M. Likhachev, “Planning for Autonomous Door Opening with a Mobile Manipulator”, Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), May 2010.

[9] S. Gray, C. Clingerman, S. Chitta and M. Likhachev, “PR2: Opening Spring-Loaded Doors”, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), The PR2 Workshop, September 2011.

[10] S. Gray, S. Chitta, V. Kumar and M. Likhachev, “A Single Planner for a Composite Task of Approaching, Opening and Navigating through Non-spring and Spring-loaded Doors”, Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), May 2013.

[11] A. Pratkanis, A. E. Leeper and K. Salisbury, “Replacing the Office Intern: An Autonomous Coffee Run with a Mobile Manipulator”, IEEE International Conference on Robotics and Automation (ICRA), May 2013.

[12] B. Axelrod and W. H. Huang, “Autonomous Door Opening and Traversal”, Proceedings of the IEEE International Conference on Technologies for Practical Robot Applications (TePRA), May 2015.

[13] D. Berenson, J. Chestnutt, S. Srinivasa, J. Kuffner and S. Kagami, “Pose-Constrained Whole-Body Planning using Task Space Region Chains”, Proceedings of IEEE-RAS International Conference on Humanoid Robots (Humanoids), December 2009.

[14] Diagram illustrated by D. T. Butterworth, 2015.